Prof. em. Aant Elzinga, University of Gothenburg

Paper given at workshop on evidence-based practice, University of Gothenburg, 19-20 May 2008

Introduction

Science policy as a separate policy domain emerged after the Second World War and became institutionalized under the influence of the OECD (Godin 2003, 2005 & 2006:654-655; Elzinga 2005). Important distinctions, like the one entailed in the acronym R&D adopted in the early 1960s for comparative statistical purposes, are still with us today, although intermediate categories like mission-oriented (basic) research, strategic research, frontier research (a term used in Europe) and transformational research (a term appearing in the U.S.) now get considerable attention. Unless one subscribes to some species of idealism or essentialism, the term basic or fundamental research, strictly speaking, has no meaning outside its use as a statistical household word: in other words, it is historically and socially contingent, and it is sometimes regarded as a contested term (Stokes 1997; Godin 2007:28-43). There is a sociopolitics (Godin 2002) that drives S&T measurement within nation states in accordance with particular conventions, together with rules of standardization codified within the intergovernmental frameworks of either the OECD (for the rich world) or UNESCO (for the so-called developing nations).

Another important distinction introduced in the early years was the one between policy for science and science for policy (Elzinga and Jamison 1995). The former has to do with stimulating research to develop an advanced knowledge base, while the latter is what we now associate with sectoral research policies (Elzinga 1980). Under the heading of science for policy one can also include various functions and mechanisms for science advice to government. Early on, science advisors were often physicists; today the accent has shifted towards persons with a background in biomedical and biotechnological fields. In some cases systematic methods of reviewing the literature have been developed to provide decision-makers and international organizations with a science base for important policy decisions. The most visible example today is the Intergovernmental Panel on Climate Change (IPCC) and its periodic assessments, in which lead authors and teams produce chapters of a comprehensive report on trends in global climate change (Elzinga 1996).

A rough analytical distinction was made in connection with environmental research, but it is also useful in other areas where controversies arise from a combination of epistemic uncertainty and strong pressure from political stakeholders. It suggests that in practice four options or policy advisory strategies are available to scientists in their position vis-à-vis the halls of power: pure scientist, science arbiter, issue advocate, and honest broker (Pielke 2007). In the present paper I shall not be concerned with these aspects of the evidence-basing of policy measures. Nor shall I rehearse the various points in the debate over organizational changes in the research landscape and policy-related doctrines that have been depicted with trendy terms (or rather metaphors) like “Mode 2” and “Triple Helix” (for that debate see Elzinga 2004). Instead the focus will be on the more classical task of policy for science: evidence-based methods for determining priorities and computer-aided modes of allocating resources from the public purse to academic science. It is in this area that a lot has been happening lately. Towards the end of the paper a broader perspective will be introduced, with some reflections that situate current developments in this area within a general shift in public management philosophy and practices.

It will also be noted how evidence-based science policy, in the forms currently being introduced in Sweden, has a detrimental effect on the well-being of research in the humanities. This research is already being, and will continue to be, systematically “miscounted” insofar as its publishing patterns revolve around monographs rather than international journal articles.

Goals, priority setting and incentive systems – from ideology to scoreboards

Research policy in its classical sense has to do with setting goals and priorities for R&D (Ziman 1994). The question of allocating resources to R&D activities is one of science policy’s most classical components. For the most part it is a matter of allocating resources out of the public purse at the national level, but it can also concern investments on regional or local municipal levels decided by actors at these levels. Funding of R&D by the European Commission through its Framework Programs and other avenues suggests that there is now also a supranational level, and here one finds administrators and planners hard at work with benchmarking and new sorts of science and technology (S&T) indicators to measure achievements and profiles.

Science policy also concerns different kinds of incentive systems to stimulate desired courses of development, for example tax reductions that encourage firms to reinvest profits in industrial research. There are also programs designed to foster collaboration between university researchers and extramural partners or users of research in industry and other areas (knowledge and technology transfer). In addition one finds practices of matching funding, e.g., between academic units and firms, governmental agencies, or EU framework programs and the like. The state can also try to steer technological development via special procurement programs that jump-start innovations in areas where there is otherwise no regular market, e.g., the military sector. The same applies within the hospital and healthcare sector, where bio-compatible implants in human bodies are not necessarily a lucrative market. At times ideologies like “picking the winners” (cf. Martin & Irvine’s now classic study with the same title) have driven investment in new and emerging technologies (high tech) with an eye to the nation’s future competitive advantage in a global marketplace. In the UK, the introduction of evidence-based policy has, even in this area, led to a retreat from ideologically driven modes of science policy.

It appears, then, that much of the current discussion about suitable models for allocating resources to research has to do with a demand for accountability in terms that are quantitative and measurable. Helga Nowotny, whom I cite at greater length below, has noted that even if the word “evidence” is seldom used in science policy, the philosophy nevertheless lies behind attempts to construct metrics that yield fine-grained evidence of performance as a basis for decisions on continued financing in a part of the public sector.

It may also be noted that although the use of quantitative measures to evaluate research performance has increased enormously, there is a dearth of literature with a reflexive take on the subject. Most reviews of the field have concentrated on bibliometric indicators and thus fail to cover the broader range of quantitative indicators; nor do they touch upon important issues of contextualization. Over the years a divide has been institutionalized between science and technology studies (S&TS) on the one hand and the interdisciplinary field of science, technology and innovation (STI) on the other, and this divide may have reinforced the situation. At least this is a conclusion one can draw from a review by an Australian research evaluation and policy group with broader ambitions (ARC Linkage Project 2005). Commenting on the lack of contextualizing studies, the authors attribute the dilemma in part to a historical legacy where the “sociology of science, which should have provided important background information about relationships between indicators and the research process, has not been interested in quantitative indicators for more than 20 years. With its constructivist turn, researchers in the discipline questioned the validity of bibliometric indicators on very basic principles (Gilbert and Woolgar 1974; Woolgar 1991) and has since ceased to make contributions to the topic. A recent trend is the emergence of a body of literature produced by scientists from outside science studies who have become interested in performance indicators. This literature is scattered across a wide variety of fields and tends to be fragmented and non-cumulative, often with little reference to the literature that does exist.” (ibid., p. 4).
One consequence of this most recent trend is that the relative and inter-subjective social character of research quality also tends to be forgotten. This feature was recognized early on by sociologists and historians of science and by some leading authors in STI (Martin and Irvine 1983; Herbertz and Müller-Hill 1995; Van Raan 1996). It is now eclipsed by spontaneous forms of positivism and essentialism partly inherent within the communities of medical, natural and engineering scientists themselves. The situation is not improved when, as in Sweden, political scientists of a traditional strain reinforce the view that scientific quality is something inherent in superior knowledge itself. That position has been sharply criticized in an incisive meta-theoretical inquiry by Ingemar Bohlin (1998), a study informed by an analytical framework grounded in contemporary S&TS.

First and second orders of evidence

The science policy discussion I home in on in this paper concerns evidence of the first order. By this I mean benchmarking and descriptions of states of affairs within publicly funded units, using numbers as well as qualitative assessments (e.g., review panels), in a landscape that is subject to policy orchestration through the incorporation of cultures of compliance. Evidence-basing of policy in the sense one finds in clinical medicine or in units responsible for social work would require a second step. Such a step would entail systematic evaluations of the efficacy of actual policy measures and instruments in order to determine to what degree the intended aims or goals are achieved. One example would be checking whether the prioritized, profiled areas of R&D progress in a suitable manner when seen from the combined point of view of quality enhancement and relevance.

Another example would be assessing the actual impact of policies aimed at increasing a country’s international economic competitiveness, or at intensifying collaboration between industry and universities in priority areas. In my reading of science policy documents I have not been able to find credible examples of evidence-basing in this (second-order) sense. The current presidential election campaign in the U.S., however, is generating some signals in the academic world suggesting that the Democratic presidential candidate Barack Obama may be a strong advocate of evidence-based science policy (Bhattacharjee 2008), meaning better “science for policy”. Some of his statements, several made long before the present campaign, are being contrasted with the practice of the Bush Administration, which by comparison is seen as ideological, particularly with regard to U.S. climate policy but also in the field of health care (Oberlin blogspot 2008).

To compare the results of implementing specific policy instruments with established goals, one needs a much more precise description of the existing situation, which serves as the baseline. This is probably why much of the talk about “best practices” is still rather loose, while a lot of emphasis is given to introducing “benchmarking”. Benchmarking will give clearer points of reference for comparing initial conditions before, and final conditions after, the implementation of a policy package. I therefore think it makes sense to distinguish, as I have above, between a first and a second order of evidencing.

A second order of evidence concerns evaluation of science policy and its instruments. The first order of evidence on the other hand concerns situation-descriptors that are needed if one wants to tighten policy audits and facilitate future comparisons between conditions before and after the implementation of a policy or package of affiliated instruments. That is why the energy of planners today seems to be so much directed towards constructing and experimenting with fine-grained metrics that may be incorporated into decision-making related to annual budgetary allocations of resources to publicly funded R&D at universities and other institutions. This is evident in several countries where performance indicators are used as a basis for changing funding flows between universities as well as between faculties and research units within them.

A new acronym for science-based policy (SciSIP)

Some of the current efforts to develop a robust indicator-based knowledge base for decision-making in science policy now fall under the heading of a new acronym, “SciSIP”. This acronym rejuvenates the old dream of the crystallographer and Marxist John Desmond Bernal: a science of science to steer the growth of science (Bernal 1939; Elzinga 1988). The ultimate rationale for such efforts may be found in a statement by John Marburger III, science adviser to President Bush. He ties it to strong demands for public accountability regarding tax dollars and to the generally diffuse policy of enhancing the nation’s economic competitiveness in a global market. In the wake of the launch of the President’s American Competitiveness Initiative (ACI), Marburger (2006) proposed an agenda for a Science of Science and Innovation Policy, which has now become a new phrase in policy documents. He argued as follows: “Science policy makers tend to rely on economic models and data when they exist, but also employ ad hoc surveys and opinions offered by panels of experts. Science policy implementers are usually government employees and elected officials whose information comes from a variety of sources of varying degrees of visibility, with advocacy groups on the high end and science policy technocrats somewhere near the bottom. I would like to change this. I would like to have science policy tools that are so credible that their products are embraced by the advocates as well as the technocrats. I do not expect tools that approach the credibility of Newton’s laws or quantum mechanics, but I believe we can move the standards of science policy making and implementation closer to what already exists in the world of economic policy.” And further: “I am emphasizing models because they are essential for understanding correlations among different measurable quantities, or metrics.” (cf. also OECD 2006 and Marburger 2007).

Apart from placing stronger emphasis on measuring outcomes and impacts, the intention is also to link micro and macro data sets and to make indicators more directly relevant to science policy. This agenda has prompted the NSF Science Metrics initiative. “The eventual aim is to create a cadre of scholars who can provide science policy makers with the kinds of data, analyses and advice that economists now provide to various government institutions.” (Mervis 2006: 347 cited in Nowotny 2007:482). Proposals from a variety of scholars have already reached the NSF (the deadline for SciSIP proposals was March 18, 2008). The funding program is housed within the Directorate for Social, Behavioral & Economic Sciences (SBE). The FY 2008 competition emphasizes three areas: analytical tools, model building, and data development & augmentation (NSF 2008). A number of STS and STI scholars will probably receive grants from this program.

In the UK a system of review panels has long been used to evaluate and rank the performance of universities, and of departments within them, at intervals of several years. In December 2006 the government announced that a new system for the assessment and funding of research would replace that framework after the 2008 Research Assessment Exercise (RAE). As part of its approach, the new framework will produce robust UK-wide indicators of research excellence for all disciplines. This represents a move away from the old “subjective” approach of the RAEs towards more “objective” methods. These methods rest on publication counts and citation measures to gauge quality and impact, plus statistical counts of external research income and postgraduate student activity at universities. The new framework will operate with fewer and broader subject divisions than the RAEs. The full set of indicators for the science-based disciplines will be produced for the first time during 2009 and will begin to influence funding allocations from 2010-11. The indicators will be based on data averaged across several years. For the arts, humanities, social sciences, mathematics and statistics the new system will be phased in more gradually, at first complemented by information from peer review panels. This is because publication patterns in these areas do not match those of the science-based disciplines, so the relevant international databases of publications and citation frequencies do not give a representative picture of them.

The idea nevertheless seems to be that reviews and summations of relevant performance indicators will fully inform funding from 2014 onward (HEFCE 2007). The aim is to raise the overall international standing (relative to other countries) of the country’s research base. As Helga Nowotny has pointed out concerning the report that pushes in this direction, “Although the report does not carry the word ‘evidence’ in its title, it is yet another example of evidence-based policy intended to replace the RAE… By devising systems to compare ‘best practices’ at national, European and international levels, self-generating, performance-enhancing mechanisms are created. Their function is to orient towards goals and objectives that readily can generate ever new performance targets and changing objectives by absorbing existing performances…” (Nowotny 2007:482).

Nowotny has first-hand experience of policy making and science advice at the highest level within the EU and is presently Vice-President of the Scientific Council of the newly established European Research Council. For her own part, she expresses skepticism about, and is critical of, the science metrics approach. She warns against entrenching a reification of numbers and the associated myth of a “trust in numbers” on which it rests; she therefore calls for other, competing constructions of “policy rooms” distributed throughout the science and innovation systems. Perceptive users of bibliometrics and research performance indicators have also warned of the unintended consequences of placing too much trust in numbers (Weingart 2004).

A leading centre in Europe where bibliometric methods have been developed is the Centre for Science and Technology Studies (CWTS) at Leiden University, led by Anthony van Raan, who is currently also the editor of the journal Research Evaluation. Another is the so-called Leuven group, the Steunpunt O&O Statistieken (abbreviated SOOS). This is a Flemish inter-university consortium located at the University of Leuven and directed by Wolfgang Glänzel. Glänzel also has longstanding affiliations with the Information Science and Scientometric Research Unit (ISSRU) at the Library of the Hungarian Academy of Sciences, which is the co-publisher (with Springer Verlag) of the journal Scientometrics. The services of the CWTS unit at Leiden have frequently been used in bibliometric studies at Swedish universities. Medical faculties have also engaged Grant Lewison, who developed bibliometric competence at the Wellcome Institute in the UK. In Sweden, Olle Persson and his Information Research Group (started in 1975) at the Sociology Department of Umeå University have been working with bibliometric methods since 1985. They publish empirical and theoretical papers and engage operationally in various commissioned evaluation exercises. More recently Ulf Sandström, who is affiliated with Linköping University, has developed various bibliometric skills in connection with research evaluations and policy. Among other things, he has worked at the policy unit of the Swedish Research Council (VR) and as expert consultant to the recent Resources Inquiry led by Dan Brändström. In its report Resurser för kvalitet (Resources for Quality; SOU 2007:81), the Resources Inquiry proposes a new model for the allocation of funding through direct appropriations to Swedish universities.
While this model is currently still only the subject of deliberations, no parliamentary decision having been taken as yet, it has already prompted a flurry of activities at universities to speed up work in developing their own capacities to do computer-aided evaluations of research performance. These activities mostly engage bibliometricians connected to the university libraries.

The Norwegian model for inter-university (re-)distribution of national appropriations for R&D

In Scandinavia there is currently some discussion of what is called the “Norwegian model” for linking state decisions on budgetary allocations to university research with systematic reviews of performance. One of the architects behind the Norwegian model is Gunnar Sivertsen (NIFU/STEP in Oslo). He has helped devise a system whereby researchers at universities and colleges report information about their publishing activities into what has become a national database, managed by bibliometricians employed at university libraries. The model is quantitative and bibliometric. A scientific publication is defined by four criteria, each of which has to be satisfied:

1) it presents a new insight;
2) it is presented in a form that makes the results testable and usable in new research;
3) it is expressed in a language and via a channel of distribution that makes it accessible to most researchers who might take an interest in it;
4) the publication channel it appears in (scientific journal, report series, book, etc.) incorporates peer review procedures (Sivertsen 2008).

Publications are excluded if they are distributed through local channels (where more than two-thirds of the publications in a series come from the same institution) or through non-scientific channels (lacking peer review). Publication channels are divided into two levels. Level 1 covers “normal” quality, where one usually finds 80% of the publications in a discipline. Level 2 covers the remaining 20%, where one finds the most significant or highest-quality publications, e.g., high-impact international scientific journals. In the case of a multi-author article, the publication is divided into fractional parts attributed to the respective authors’ home institutions. A point system is used to give weights to different kinds of publications:

Publication points are calculated annually by multiplying the author fraction affiliated with an institution by the appropriate weight for publication form (type and level). In addition to publication points, three other indicators are combined in the result-based decisions for redistributing funds among universities and colleges, with an eye to their final quality measure expressed on a national scoreboard. These are, first, the number of doctoral degrees; second, EU funding attracted; and third, Norwegian Research Council funding attracted over the same period. The evaluation/measurement exercise was incorporated into the state budget for universities in 2005. It is currently being extended to the national research institute sector and health-related enterprises.
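The point computation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used by NIFU/STEP. The weight table referred to above is not reproduced in this text, so the values in `WEIGHTS` are assumed for illustration and should be replaced with the official table. Each institution's author fraction is taken to be its share of the article's author list. The institutions and publications in the example are hypothetical.

```python
from collections import defaultdict

# ASSUMED weights per (publication form, channel level); the official table
# is not reproduced in the text, so treat these as illustrative placeholders.
WEIGHTS = {
    ("article", 1): 1.0,
    ("article", 2): 3.0,
    ("chapter", 1): 0.7,
    ("chapter", 2): 1.0,
    ("monograph", 1): 5.0,
    ("monograph", 2): 8.0,
}


def publication_points(publications):
    """Sum fractionalized publication points per institution.

    Each publication is a dict with a 'form', a channel 'level' (1 or 2) and
    'authors': a list of home-institution names, one entry per author.
    An institution's author fraction is its share of that list (assumption).
    """
    points = defaultdict(float)
    for pub in publications:
        weight = WEIGHTS[(pub["form"], pub["level"])]
        n_authors = len(pub["authors"])
        # sorted() only makes the output order deterministic
        for institution in sorted(set(pub["authors"])):
            fraction = pub["authors"].count(institution) / n_authors
            points[institution] += fraction * weight
    return dict(points)


# Hypothetical data: one co-authored level-2 article and one level-1 monograph.
pubs = [
    {"form": "article", "level": 2, "authors": ["UiO", "UiO", "NTNU", "UiB"]},
    {"form": "monograph", "level": 1, "authors": ["UiB"]},
]
print(publication_points(pubs))  # {'NTNU': 0.75, 'UiB': 5.75, 'UiO': 1.5}
```

Fractional counting means a single publication never yields more than its full weight, however many institutions share it. In the example, the level-2 article's assumed 3 points are split 1.5 / 0.75 / 0.75.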

Swedish experience and the proposal of a more “objective” model

Interested parties in Denmark, Finland and Sweden have been studying the Norwegian model and looking at alternatives. Their aim is to incorporate quantitative and bibliometric information as a basis for state budgetary allocations of funds to university and other public-sector research. Several Swedish universities are developing bibliometric functions to provide university boards with instruments to monitor the quantity and quality of publications. The most comprehensive report so far is one from Uppsala University, where consultants from CWTS/Leiden were employed to carry out an extensive evaluation, Quality and Renewal. KoF07 Report (see UU home page). At the national level, the White Paper known as the Resources Inquiry (or Brändströmska utredning) on forms of financing for universities’ activities (SOU 2007:81) reflects a certain enchantment with the British experience of the RAE system. It is equally taken with the discussions regarding the replacement of that system, at least in part, by “robust” indicators. The White Paper criticizes the Norwegian model for being too costly and cumbersome. In its view, the model requires local university-based staff to manage researchers’ self-reporting of publications into a national database that needs to be continually updated and validated. To circumvent this “subjective” element, the “Swedish model” proposes instead a quasi-objective mode of measuring performance at an aggregate level that would allow individual universities in this country to be compared. The proposed methods would make use only of existing information on publication counts and on relative performance levels above or below a world norm. This norm is calculated for 23 different classes of journals registered and indexed in the Thomson/ISI database Web of Science (WoS), systematically excluding journals in languages other than English. The characteristics of the model are:

1) the numerical value the model assigns to a university is obtained by focusing on publications in given disciplinary areas;

2) calculating productivity by translating the actual number of publications into a virtual number of researchers of average productivity who, in each disciplinary area, would be required to produce the same number of publications;

3) calculating the citation value by taking the average number of citations received by the publications in question and dividing it by the expected value, i.e., the number of citations one would expect the corresponding number of averagely productive researchers to receive (field normalization);[1]

4) multiplying the productivity value by the citation value for each disciplinary area for each of the universities reviewed.
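The four steps can be sketched as follows. This is a minimal reading of the description, not the code behind SOU 2007:81. The field reference values (`pubs_per_avg_researcher`, `expected_cites_per_pub`) are hypothetical inputs that, in the actual model, would be derived from WoS-based world norms. The zero-publication guard is an assumption added here.

```python
from dataclasses import dataclass


@dataclass
class FieldRecord:
    """Hypothetical input for one university in one disciplinary area."""
    publications: int               # publications found in the database
    citations: int                  # citations received by those publications
    pubs_per_avg_researcher: float  # ASSUMED field norm: output of an average researcher
    expected_cites_per_pub: float   # ASSUMED field norm: expected citations per publication


def field_score(rec: FieldRecord) -> float:
    """Productivity value times field-normalized citation value (steps 2-4)."""
    if rec.publications == 0:
        return 0.0  # assumption: no indexed output scores zero
    # Step 2: a "virtual" number of averagely productive researchers
    productivity = rec.publications / rec.pubs_per_avg_researcher
    # Step 3: observed mean citations divided by the expected mean (field normalization)
    citation_value = (rec.citations / rec.publications) / rec.expected_cites_per_pub
    # Step 4: combine the two values by multiplication
    return productivity * citation_value


fields = {
    "medicine": FieldRecord(400, 6000, 4.0, 12.0),
    "humanities": FieldRecord(10, 20, 0.5, 2.0),
}
scores = {name: field_score(rec) for name, rec in fields.items()}
print(scores)  # {'medicine': 125.0, 'humanities': 20.0}
```

Algebraically, the product reduces to citations ÷ (pubs_per_avg_researcher × expected_cites_per_pub). The score therefore tracks total field-normalized citation volume rather than per-paper impact alone: medicine scores 6000 ÷ 48 = 125 in the example.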

A position paper appended to the White Paper (Sandström & Sandström 2007b) argues that the advantage of this model is that one does not need to collect raw data from the universities, since the raw data can be obtained directly from the ISI/WoS database. This procedure, it is argued, is much less costly than relying on universities’ databases, which require competent staff to provide and manage local inputs that are constantly updated and validated. The other advantage emphasized is that field-normalization techniques allow comparisons to be made across different disciplinary areas, such as technology, medicine, natural sciences, social sciences and humanities. They also allow comparisons between sub-classes within these broad areas, something the Norwegian model is purportedly unable to do.

The foregoing line of argument also appears in some work of the aforementioned bibliometrics group at the Australian National University (see above). In 2007 two members of this team revisited and evaluated the political science portion of the 2001 UK RAE. The outcome of the original review panel assessment (RAE 2001) was compared with the results of a new evaluation for the year 2001. The Australian bibliometricians carried out this new evaluation using only quantitative indicators available in international databases. The conclusion reported is worth citing here because it may have influenced the thinking of Ulf Sandström (cf. Sandström & Sandström 2007a), the main author of the model outlined in the Swedish White Paper. The authors of the Australian report (Butler & McAllister 2007: 14-15) write: “Our findings presented here suggest, unequivocally, that a metrics-based model, using objective, transparent indicators drawn from a range of readily-available measures, will yield results which are very close to those of a peer-based evaluation model [using review panels]. Such a stronger reliance on quantitative indicators, of which bibliometrics is a central measure, will, most importantly, obviate the need for a large peer review committee and the consequent indirect biases that it introduces into the system. And not least, such an approach would help to reduce one of the most oft-quoted criticisms of the RAE, namely the cost in university resources and academic staff time.”

Four prongs in a possible Swedish inter-university (re-)distribution key

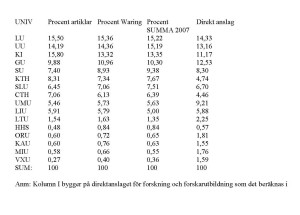

The main text of the Swedish White Paper, however, seems to shy away from adding citation frequency as an additional indicator in the first round of funding based on performance measures. Instead the idea is to give universities block grants, half of which will be stable while the other half will vary in accordance with an inter-university distribution key. The first prong of the distribution key is the number of teachers and researchers who hold doctorates; it counts for 5%. Added to this is a prong for the number of women professors, which also counts for 5%. A third prong measures the universities’ ability to attract external funding and counts for another 20% of the new budget. The final 20% of budgetary allocations will be based on the productivity indicator. A computation was made of the effect of this fourth prong on the Fiscal Year 2007 state budget allocations to universities, assuming that the Swedish productivity and citation model were fully deployed. It shows that the Stockholm School of Economics (HHS), the Royal Institute of Technology in Stockholm (KTH), Chalmers University of Technology in Gothenburg, Lund University, the Swedish University of Agricultural Sciences, Uppsala University and Karolinska Institutet would be winners. On the losing end we would find the universities of Umeå, Gothenburg, Luleå and Linköping and many of the smaller universities. The latter either stand still or get less money. The two right-hand columns in the Table (SOU 2007:81, p. 415) show, first, the percentage of the national budget going to universities in line with the model’s recommendation (Procent Summa 2007). Second, they show the actual percentages under the present system (direkt anslag, i.e., direct appropriation).
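The arithmetic of such a distribution key can be sketched as follows. This is a hedged illustration only. The prong weights come from the text, but two rules are our own assumptions: the stable half follows each university's previous share of direct appropriations, and each prong is split in proportion to the raw indicator values. The university names and figures are also our own.

```python
# Prong weights from the text; the remaining half of the block grant is stable.
PRONG_WEIGHTS = {
    "doctorate_staff": 0.05,
    "women_professors": 0.05,
    "external_funding": 0.20,
    "productivity": 0.20,
}
STABLE_SHARE = 0.50


def allocate(total_budget, previous_share, indicators):
    """Split total_budget among universities.

    previous_share: university -> share of the previous direct appropriation
    (ASSUMED basis for the stable half; shares sum to 1).
    indicators: prong -> {university: raw indicator value}.
    """
    alloc = {u: STABLE_SHARE * total_budget * s for u, s in previous_share.items()}
    for prong, weight in PRONG_WEIGHTS.items():
        values = indicators[prong]
        prong_total = sum(values.values())
        for u, v in values.items():
            # ASSUMPTION: each prong is divided in proportion to raw values
            alloc[u] += weight * total_budget * v / prong_total
    return alloc


allocation = allocate(
    1000.0,
    {"Univ A": 0.5, "Univ B": 0.5},
    {
        "doctorate_staff": {"Univ A": 300, "Univ B": 100},
        "women_professors": {"Univ A": 20, "Univ B": 20},
        "external_funding": {"Univ A": 600, "Univ B": 400},
        "productivity": {"Univ A": 50, "Univ B": 150},
    },
)
print({u: round(x, 2) for u, x in allocation.items()})  # {'Univ A': 482.5, 'Univ B': 517.5}
```

The stable half and the four prongs together account for 100% of the grant, so the allocations always sum to the total budget. Any gain for one university on a prong is necessarily another university's loss.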
Within any given university, too, the humanities are at a disadvantage, since they do not figure as well in the international science citation databases as the natural, technological and medical sciences do. In preparing the model for Sweden there was a suggestion that articles in the humanities, since they are fewer, should be boosted by multiplying each by a factor of five. Since there were objections to this, it was finally decided that a multiplier of two (2x) would be employed in counting performance in humanities disciplines. The irony, however, is that if a researcher has published no article visible in the international databases, zero times two (0x2) is still zero. Consequently it is not wrong to conclude that the introduction of evidence-based science policy entails a strong skew. It amounts to a systematic miscounting of performance in the humanities, which translates into perceptible disadvantages in future funding for departments in these fields.

Table 6. Comparison in percent between number of articles, Waring values and SUMMA, sorted by SUMMA value (column H)

The Flanders model

The model proposed in Bilaga 8 (Appendix 8) of the Swedish White Paper has also been referred to as a modification of the Flanders model, since it incorporates aspects of the bibliometric approach elaborated by the Leuven group. The Flanders model’s task is to support research policy making in the Flemish part of Belgium. Its central unit is situated at Leuven University, within a consortium of all Flemish universities and some government agencies called Steunpunt O&O Statistieken (abbreviated SOOS). The consortium was set up in 2002 to construct indicators for R&D policy purposes. Its task was to reconfigure the distribution of funds from a special research portfolio (Bijzonder Onderzoeksfonds, or BOF) to the six Flemish universities, making it more output-oriented; the fund amounted to 90 million euros in fiscal year 2002. Until 2002 the BOF key consisted of three general prongs. The first was the number of PhDs produced by each university over the four-year period prior to the year of the computation; it counted for 50% of the distribution key. PhDs, moreover, are weighted on a scale of 1-3. A PhD in physics, which requires more instrumentation, might weigh 3 points, while a PhD in economics weighs only 1 point. The second prong, 35% of the BOF key, was based on the number of graduates at each university during the same four-year period. The third, 15% of the BOF key, was based on something equivalent to the ability to attract external grants. Each university then received a fraction of the BOF money in accordance with its relative share on the three indicators.
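The pre-2003 BOF key described above can be sketched in Python. This is a hedged sketch, not the SOOS implementation. The 50/35/15 weights and the 1-3 PhD scale are from the text. The per-discipline values in `PHD_DISCIPLINE_WEIGHT` only reproduce the physics and economics examples, and the universities and counts are hypothetical.

```python
# Weights of the pre-2003 BOF key as described in the text.
BOF_WEIGHTS = {"weighted_phds": 0.50, "graduates": 0.35, "external_grants": 0.15}

# Illustrative discipline weights on the 1-3 scale (hypothetical beyond the two examples).
PHD_DISCIPLINE_WEIGHT = {"physics": 3, "economics": 1}


def weighted_phds(phds_by_discipline):
    """Sum PhDs over four years, each weighted by its discipline's points."""
    return sum(PHD_DISCIPLINE_WEIGHT[d] * n for d, n in phds_by_discipline.items())


def bof_shares(universities):
    """Return each university's fraction of the BOF fund.

    universities: name -> {'phds': {discipline: count}, 'graduates': int,
    'external_grants': float}. Each indicator is turned into a relative share
    of the Flemish total, and the shares are combined with BOF_WEIGHTS.
    """
    raw = {
        name: {
            "weighted_phds": weighted_phds(u["phds"]),
            "graduates": u["graduates"],
            "external_grants": u["external_grants"],
        }
        for name, u in universities.items()
    }
    totals = {k: sum(r[k] for r in raw.values()) for k in BOF_WEIGHTS}
    return {
        name: sum(w * r[k] / totals[k] for k, w in BOF_WEIGHTS.items())
        for name, r in raw.items()
    }


universities = {
    "U1": {"phds": {"physics": 10, "economics": 10}, "graduates": 3000, "external_grants": 20.0},
    "U2": {"phds": {"economics": 40}, "graduates": 1000, "external_grants": 20.0},
}
shares = bof_shares(universities)
fund = 90_000_000  # euros, the 2002 BOF total mentioned in the text
print({name: round(share * fund) for name, share in shares.items()})
```

The discipline weighting matters here. U2 produces twice as many PhDs as U1 (40 against 20), yet it earns the same weighted PhD score, because all of its PhDs are in economics.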

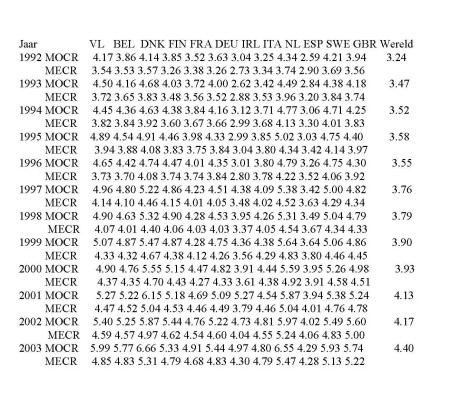

From 2003 onwards an additional criterion was added to the distribution key: each university's share in the total Flemish academic publication and citation output in the Science Citation Index Expanded (Thomson ISI, Web of Science) over a shifting ten-year window. Initially this indicator counted for 10% of the BOF-key, but it was expected that in the coming years its weight would grow to 30%, while 70% would continue to rest on the older set of indicators. At first a great deal of data cleaning was needed to construct the Flemish WoS-SCI universe over the ten-year window. The result was validated with the help of the universities themselves by letting each institution check its own downloads manually (48 000 “raw” publications filtered and distributed across the six universities), a process that led to further improvements while also providing transparency and greater legitimacy in the eyes of those affected. Further refinement of the methodology is still going on. A question mark seems to remain regarding the usefulness of including certain areas in the humanities and social sciences, since it is recognized that publications from these areas are insufficiently represented in the international databases (Debackere & Glänzel 2004).
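
The phasing-in of the bibliometric component can be sketched as a convex combination of the older key and the new indicator. The sketch assumes that each university's bibliometric share is already available as a single number and that the older indicators keep their relative proportions within the remaining weight; how publications and citations were actually merged into one share is not reproduced here, and `blended_key` and the figures are hypothetical.

```python
def blended_key(old_shares: dict[str, float],
                bib_shares: dict[str, float],
                bib_weight: float) -> dict[str, float]:
    """Combine the older BOF-key shares with a bibliometric share.

    bib_weight is the fraction of the key resting on publications and
    citations (0.10 initially, expected to rise to 0.30 according to the
    text); the remaining 1 - bib_weight rests on the older indicators.
    """
    if not 0.0 <= bib_weight <= 1.0:
        raise ValueError("bib_weight must lie between 0 and 1")
    return {u: (1.0 - bib_weight) * old_shares[u] + bib_weight * bib_shares[u]
            for u in old_shares}


if __name__ == "__main__":
    old = {"A": 0.6, "B": 0.4}   # hypothetical shares from the older indicators
    bib = {"A": 0.3, "B": 0.7}   # hypothetical shares of Flemish SCI output
    for w in (0.10, 0.30):
        print(w, {u: round(s, 3) for u, s in blended_key(old, bib, w).items()})
```

With these invented numbers university A's fraction falls from 0.60 to 0.57 at a 10% weight and to 0.51 at 30%, which illustrates how the growing weight moves money towards institutions with a stronger SCI record.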

Table 8.7: Evolution of the observed average citation frequency (MOCR) and the expected citation frequency (MECR) for Flanders and eleven European reference countries (all fields combined; source: SCIE)

Norwegian criticism of the Swedish White Paper prompts debate

In reply to the criticism of the Norwegian model in the Swedish White Paper, Norwegian authorities have pointed out that the Swedish investigatory group never contacted Norwegian experts to find out what the Norwegian model can and cannot actually do (Universitets- og Høgskolerådet, Oslo, letter to Utbildningsdepartementet, Stockholm, 6 Jan. 2008, ref. 08/4-1).[2] For example, they state that, contrary to the claim made in the Swedish report, the Norwegian model does not lead to a redistribution of funds that disadvantages the engineering sciences (more specifically NTNU in Trondheim).

The Swedish report also claims that the indicator for completed doctoral dissertations rewards a humanities doctorate with three times as much money as a medical doctorate. This too is shown to rest on a misunderstanding and insufficient knowledge of how the actual Norwegian system works. Further, the Swedish investigators are criticized for giving a false picture of the changes in the inter-university distribution of money for the fiscal year 2005 based on the model. The Norwegian experts also deny that implementing their model is very labour-intensive and therefore costly. The main bone of contention in the discussion that emerged after publication of the Swedish White Paper, however, concerns transparency and thereby the legitimacy of the redistribution of funds in the eyes of the researchers affected. The Norwegian model takes its input from researchers' own reports of their publications to their universities' databases, which gives the research community a sense of ownership over the data used in the assessment. This aspect is absent in the Swedish model, which may therefore be perceived as more alien (and alienating; compare the comments by Nowotny and Weingart above on the reification of numbers).

The Norwegians argue that because the proposed Swedish model relies on very advanced and non-transparent methods for correcting quality measures, applied to data sets for the different universities drawn solely from ISI/WoS sources, it may be difficult to win acceptance of the model's fairness as an instrument for reconfiguring future flows of research funds. In this context it may be noted that in the US there has recently been a revolt by academics protesting against injustices caused by indicator-based rankings of universities; they argue that these rankings rest on dubious methodology and spurious data but nevertheless have a huge influence (Butler 2007).

The Danish Ministry responsible for Science has also closely studied both the Norwegian and the Swedish (SOU 2007:81, Bilaga 8) models (Universitets- og Bygningsstyrelsen Notat 2007) and has made the following observations: ”The Swedish indicators take their point of departure to a high degree in impact measures of the kind used especially in the health sciences, technological and natural sciences. The model will therefore gain greater acceptance in these disciplines in the short term. By the same token it will meet greater criticism from the social sciences and humanities than the Norwegian model. The Swedish model moreover uses rather advanced methods of estimation that can, however, be very difficult for university leaderships as well as politicians to see through and interpret (gennemskue og fortolke). The very advanced Swedish impact method is chosen at the cost of overview, legitimacy and timeliness (aktualitet), which [on the other hand] are some of the central advantages of the Norwegian model” (ibid.). The Danes therefore expect that, except for the few computational experts who do the estimations, the Swedish model will be a kind of ”black box”. Apart from its transparency, the greater legitimacy of the Norwegian model is also found to derive from its inclusion of publication channels other than those indexed by ISI/WoS. For this and other reasons the Danes say they are more inclined to choose the Norwegian bibliometric set-up and modify it for Danish purposes.

A further viewpoint relevant to the current discussion comes from Karolinska Institutet (KI) in Stockholm, which otherwise does well on both productivity and citation scores compared with world averages in health and medical research. A recent evaluation of public health research at KI, covering thirteen departments and research centres, found serious discrepancies when data from the ISI/WoS and Medline databases were compared with researchers' self-reports of their publications. The self-reports gave a figure for original articles and larger reviews that was 25% higher than the number found in the international databases; in other words, the significant publications lying outside the indexed population amounted to a quarter of the indexed count (roughly one in five of the self-reported items). On the other hand, 25% of the works the researchers thought were not indexed could in fact be found in KI's library databases. The conclusion drawn was that even in medicine, and especially in an inter- and transdisciplinary field like ”public health research”, information drawn from the standard international databases must be complemented with data reported by the researchers themselves (Bondjers et al. 2008).
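
Because a percentage depends on its base, the comparison of self-reports with database records can be sketched with set operations on publication identifiers. The identifiers below are invented, and matching records by a single identifier is a simplifying assumption (real matching of self-reports against ISI/WoS or Medline records usually needs data cleaning); `coverage_gap` is a hypothetical name.

```python
def coverage_gap(self_reported: set[str], indexed: set[str]) -> dict[str, float]:
    """Express the gap between self-reports and database records two ways.

    relative_excess: how much larger the self-reported count is than the
    indexed count (the "25% higher figure" type of statement).
    share_outside: fraction of self-reported items with no matching record.
    """
    if not self_reported or not indexed:
        raise ValueError("both sets must be non-empty")
    outside = self_reported - indexed  # reported items not found in the index
    return {
        "relative_excess": (len(self_reported) - len(indexed)) / len(indexed),
        "share_outside": len(outside) / len(self_reported),
    }


if __name__ == "__main__":
    reported = {f"pub{i}" for i in range(125)}  # hypothetical identifiers
    found = {f"pub{i}" for i in range(100)}     # subset located in the database
    print(coverage_gap(reported, found))  # {'relative_excess': 0.25, 'share_outside': 0.2}
```

With 125 self-reported items of which 100 are indexed, the self-reports exceed the indexed count by 25%, while 20% of the self-reported items lie outside the index.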

Now I want to consider a broader perspective in order to make sense of the onslaught of bibliometric methods and indicator reviews as a means of generating an evidence base for policy.

Broadening the perspective: New Public Management and Audit Society

In recent times some of the discussion on science policy has come to incorporate the fashionable words “participation” (Elzinga 2008) and “governance” (cf. Hagendijk et al. 2005; Guston and Sarewitz 2006), which link back to a shift in perspective in public management theory and practice. There is thus a relationship, seldom taken up, between the current ”science of science and innovation” trend and the New Public Management (NPM) movement that had its heyday in the 1990s (Lane 1994; 1995). Underlying the new bibliometric methods for guiding R&D resource allocation lies the philosophy of shifting from input to output control, of clearer contractual relationships between funder and performer (agencification, contractification), and of making explicit a market of products (publications), their quality (citation levels) and the economic rewards that are supposed to follow international recognition (symbolic capital). This is the same redefinition of the relationship between those who finance research and those who perform it (agencification) that NPM thinking advocates. An important element in implementing the new management philosophy in the organizational domain has been to change the mechanisms for allocating funding and to experiment with particular measuring instruments (or metrics) that allow decision-makers to evaluate more stringently what has resulted from the use of those resources. In general terms one can speak of a shift from a focus on inputs to a focus on outputs or outcomes. While the reins on academic research are loosening on the input side, methods for evaluating and assessing outcomes are becoming more intrusive.

As already indicated, in the changed landscape public institutions are also expected to follow up and control the outcomes of investments, at the levels of both policy-making and implementation. There is thus a shift from accounting for resource inputs to evaluating and assessing outcomes or outputs, as a necessary corollary of the fundamental change in doctrine concerning accountability for public sector institutions. Former trust in the wisdom and non-opportunism of civil servants and professionals is replaced by mistrust and hence by a demand for external control by means of externally initiated evaluations, assessments or auditing procedures. Michael Power and Richard Laughlin (1992) called this “accountingization” (“Critical theory and accounting”, cited in Almqvist 2006:24).

Accountingization

“Accountingization” refers to an effort to make visible, break down and categorize costs in areas and endeavours where such costs were earlier or traditionally aggregated or more or less undefined. Steering, follow-up and evaluation or auditing are thus emphasized, predicated on a buyer-seller or principal-agent nexus of contracts and cost-cutting that replaces former trust in the providers and administrators of welfare tasks to serve citizens or provide public goods. Citizens are no longer addressed as citizens but as clients and consumers, and we get privatization and commodification of former public goods, including portions of scientific knowledge (qua intellectual property). Functions and mechanisms for dividing up public resources are thereby supposed to move from the realm of politics to that of the marketplace.

Paradoxically, though, the quasi-markets are still created by political decisions. But now this is no longer called government but governance, i.e., a multi-level chain of delegation and decentralization in the process of orchestration and steering in tune with signals from the market. The new mode of accountability calls for reliable and, ideally, fine-grained metrics of performance in terms of various quantitative indicators. Some studies of health care have shown, however, that rewarding actors on the basis of performance measures may not lead to lower costs. Profit-driven health care makes it competitively advantageous to encourage fairly healthy individuals to make quick extra visits to the doctor, so that forms of care that are not really necessary get prioritized; such visits prove more profitable for the health care provider than, for example, involvement with elderly patients with chronic illnesses. The quest for profit thus appears to introduce a skew that is not counted in economic cost-benefit measures.

Summing up, the three main ingredients of the NPM narrative are: first, competition; second, agencification, i.e., the introduction of contractual relationships; and third, accountingization. A more complex scheme based on a review of the literature would include further key terms.

Brand names and other fashions

A lasting influence of NPM is already evident in the attention and energy that universities have expended on fine-tuning mission statements and visions, making their profiles visible and producing strategy documents. I think we all recognize the efforts of university bureaucracies during the past decades. Public relations and alumni fundraising are also part of this picture. It further signifies the cosmetic changes brought about by new and fashionable conceptions of governance. Ultimately one has to ask how much will be ritual and how much will cut to the bone. Robust research institutions will probably have no difficulty, under stricter accountingization regimes, in turning new number-grinding exercises to their own advantage; this will become a natural ingredient of reputation management. Control of control, as Michael Power calls it, is a ritual whereby, in a situation where trust has been lost, the principal is reassured by the introduction of a second-order control: the task becomes that of verifying that a system of control is in place (supposedly internalized in the agent's working environment). One is no longer concerned with the actual detail or content of the performance per se, but rather with the existence of second-order routines as a proxy. In Sweden one example of this is the quality assurance audits periodically carried out by the Swedish National Agency for Higher Education (Högskoleverket).

Control of control

[Figure: organization management >> internal audit >> external audit >> state inspection. Symbols of compliance (assurances) pass along this chain towards state inspection, while delegated control passes back down the chain towards organization management.]

The cynical view is the one expressed by Daniel Greenberg (2007a; cf. also 2007b) in his article in Science, where he suggests that the ultimate name for a typical university running with the trend of the times ought to be “The University of Avarice” (the article is illustrated with a nice cartoon). External relevance pressures, marketization, the commodification of public goods and the ensuing accountingization certainly bring cultural changes to the hallowed halls of academe:

– a culture of compliance

– a culture of profiling, corporate branding, trade-marking and conscious reputational management and identity management

Apart from orchestrated self-regulation through the inducement of a compliance culture, one also gets, by extension, practices of reputation management whereby institutions try to enhance their image. It has been speculated that publication behaviour in research communities may also change as researchers try to do well in the universe of citation indicators and adapt to new computer-aided models for redistributing funds between competing universities and between departments within them (Debackere & Glänzel 2004: 273-274). Reputation becomes a key asset in which providers trade. In our universities reputation management also manifests itself in the practice of trade-marking and brand names, and in disputes about how the university's traditional logotype should be modified to bring it in tune with the times. According to identity consultants, a logotype is much more than a pattern on paper or a letterhead; it is a symbol that embodies a metaphysical means of unifying actors around a mission as well as attracting customers.

Bibliography

ARC Linkage Project (2005), The Strategic Assessment of Research Performance Indicators – a Literature Review. Canberra: The Australian National University, Research School of Social Sciences, Research Evaluation and Policy Project (REPP) Discussion Paper 05/1, 84 pp. <repp.anu.edu.au/Literature%20Review3.pdf>

Almqvist, Roland (2006), New Public Management – om konkurrens, kontrakt och kontroll (Malmö: Liber).

Barzelay, Michael (2001), The New Public Management. Improving Research and Policy Dialogue (Berkeley: University of California Press).

Bhattacharjee, Yudhijit (2008), “New Focus – Democrat: Barack Obama”, Science, Vol. 319, No. 5859, pp. 28-29.

Bohlin, Ingemar (1998), Sken och Verklighet. Kvalitet inom Universitetsvärlden i Relativistisk Belysning. Stockholm: Byggforskningsrådets (BFR:s) vetenskapliga nämnds skriftserie.

Bondjers, Göran, Harri Vaino and Dag Thelle (2008), The Karolinska School of Public Health – potential, visions, and implementation. Stockholm: Karolinska Institutet (March 2008).

Butler, Declan (2007) ”Academics strike back at spurious rankings”, Nature Vol. 447, pp. 514-515 (31 May 2007).

Butler, Linda and Ian McAllister, “Metrics or Peer Review? Evaluating the 2001 UK Research Assessment Exercise in Political Science”, <http://repp.anu.edu.au/papers/2007_ukresearchassess.pdf>.

Debackere, Koenraad and Wolfgang Glänzel (2004), “Using a bibliometric approach to support research policy making: the case of the Flemish BOF-key”, Scientometrics, Vol. 59, No. 2, pp. 253-276.

Elzinga, Aant (1980), “Science Policy in Sweden: sectorization and adjustment to crisis”, Research Policy, Vol. 9, No. 2 (April 1980), pp. 116-146.

Elzinga, Aant (1988), “Bernalism, Comintern and the Science of Science: Critical Movements Then and Now”, in Jan Annerstedt & Andrew Jamison eds., From Research Policy to Social Intelligence. MacMillan Press, London 1988, pp. 87-113.

Elzinga, Aant and Andrew Jamison (1995), “Changing Policy Agendas in Science and Technology”, in S. Jasanoff et al. (eds), Handbook of Science and Technology Studies. London: Sage.

Elzinga, Aant (1996), ”Shaping Worldwide Consensus: the Orchestration of Global Change Research”, in Aant Elzinga & Catharina Landström (eds), Internationalism and Science. London and Los Angeles: Taylor Graham, pp. 223-255.

Elzinga, Aant (2004), ”The New Production of Reductionism in Models Relating to Research Policy”, paper presented at the Nobel Symposium at the Royal Swedish Academy of Sciences, November 2002, in Karl Grandin, Nina Wormbs and Sven Widmalm (eds), The Science-Industry Nexus. History, Policy, Implications. Sagamore Beach, MA: Science History Publications/USA, pp. 277-304. Full text: <http://www.csi.ensmp.fr/WebCSI/4S/download_paper/download_paper.php?paper=elzinga.pdf>

Elzinga, Aant (2005), ”Scientific Community: Development of Science Policy”, in Sal Restivo (ed.), Encyclopedia of Science, Technology and Society. Oxford: Oxford University Press.

Elzinga, Aant (2008), “Participation”, in Gertrude Hirsch Hadorn et al. (eds), Handbook of Transdisciplinary Research. Bern & Zürich: Springer Science + Business Media B.V., pp. 345-360.

Gilbert, G.N. and S. Woolgar (1974), “Essay review of the quantitative study of science: an examination of the literature”, Science Studies, Vol. 4, pp. 279-294.

Godin, Benoit (2003), “Measuring science: is there ‘Basic research’ without statistics?”, Social Science Information, Vol. 42, No. 1, pp. 57-90.

Godin, Benoit (2005), Measurement and Statistics on Science and Technology, 1920 to the Present, London: Routledge.

Godin, Benoit (2006), ”The Linear Model of Innovation. The Historical Construction of an Analytical Framework”, Science, Technology and Human Values, Vol. 31, pp. 639-667.

Godin, Benoit (2007), ”What’s Science? Defining Science by Numbers 1920-2000”. Montreal: Project on the History and Sociology of S&T Statistics at the Université du Québec à Montréal, Working Paper No. 35.

Greenberg, Daniel S. (2007a), “Science and Society: On the Road to Academic Greatness – A Parable”, Science Vol. 317, Issue 5843 (7 September), pp. 1328-1329.

Greenberg, Daniel S. (2007b), Science for Sale. The Perils, Rewards and Delusions of Campus Capitalism (Chicago: University of Chicago Press).

Guston, David and Daniel Sarewitz (eds) (2006), The Next Generation of Science and Technology Policy. Madison: University of Wisconsin Press.

Hagendijk, Rob P. et al. (2005), Report on the STAGE Project: Science, Technology and Governance in Europe. <http://www.stage-research.net/>

HEFCE (2007), (http://www.hefce.ac.uk/pubs/circlets/2007/c106_07/).

Herbertz, H. and B. Müller-Hill (1995), “Quality and efficiency of basic research in molecular biology: a bibliometric analysis of thirteen excellent research institutes”, Research Policy, Vol. 24, pp. 959-979.

Hood, Christopher (1991), “A Public Management for All Seasons?”, Public Administration, Vol. 69, pp. 3-19.

Lane, Jan-Erik (1994), ”Will public management drive out public administration?”, Asian Journal of Public Administration, Vol. 16, No. 2, pp. 139-151.

Lane, Jan-Erik (1995), The Public Sector: Concepts, Models and Approaches (London: Sage, 2nd rev. edn).

Marburger, John H. III (29 March 2006): <http://commerce.senate.gov/pdf/marburger-032906.pdf>

Marburger, John H. III (19 April 2007): <http://commerce.senate.gov/public/_files/Testimony_JohnMarburger_OSTP_FY08JHMSenateCommerceTestimony_FINAL.pdf>

Martin, B. R. and J. Irvine (1983), “Assessing basic research. Some partial indicators of scientific progress in radio astronomy”, Research Policy, Vol. 12, pp. 61-80.

Nowotny, Helga (2007), “How many policy rooms are there? Evidence-based and other kinds of science policies”, Science, Technology and Human Values, Vol. 32, No. 4, pp. 479-490.

NSF (2008), <www.nsf.gov/pubs/2008/nsf08520/nsf08520.htm>

NSF (2007), Science of Science and Innovation Policy FY08 <http://www.nsf.gov/pubs/2008/nsf08520/nsf08520.htm>.

Oberlin Blogspot (2008), “Barack Obama: Pushes evidence-based science policy”. <http://oberlinsciencelibrary.blogspot.com/2008/01>

OECD Blue Sky II (2006): <http://www.oecd.org/dataoecd/48/14/37483994.pdf>

Osborne, David and Ted Gaebler (1992), Inventing Government (Reading: Addison-Wesley Publ. Co.).

Pielke, Roger A. Jr. (2007), The Honest Broker. Making Sense of Science in Policy and Politics. Cambridge: Cambridge University Press.

Power, Michael and Richard Laughlin (1992), “Critical theory and accounting”, in M. Alvesson & H. Willmott (eds), Critical Management Studies (London: Sage).

Power, Michael (1997), “From Risk Society to Audit Society”, Soziale Systeme, Vol. 3, H. 1, pp. 3-31.

Sandström, Ulf and Erik Sandström (2007a), “A metric for academic performance applied to Australian universities 2001-2004”. <http://forskningspolitik.se/DataFile.asp?FileID=137>

Sandström, Erik and Ulf Sandström (2007b), “Modell för beräkning av direktanslag till svenska lärosäten baserad på forskningsproduktion och citeringsgrad”, Bilaga 8 i Resurser för Kvalitet (SOU 2007:81), pp. 389-441. <http://forskningspolitik.se/DataFile.asp?FileID=149>

Sivertsen, Gunnar (2008), “Den norske modellen”, Forskningspolitikk, No. 1/2008, pp. 14-15.

Universitets- og Bygningsstyrelsen (2007), “Svensk model for bibliometri – I ett norsk og dansk perspektiv”, Copenhagen: Notat 12 Nov. 2007.

Stokes, Donald E. (1997), Pasteur’s Quadrant: Basic Science and Technological Innovation. Washington, DC: Brookings Institution Press.

Van Raan, A. F. J. (1996), “Advanced bibliometrics as quantitative core of peer review based evaluation and foresight exercises”, Scientometrics, Vol. 36, pp. 397-420.

Weingart, Peter (2004), “Impact of bibliometrics upon the science system: inadvertent consequences?”, in H. Moed, W. Glänzel and U. Schmoch (eds), Handbook of Quantitative Science and Technology Research. Dordrecht: Kluwer Academic Publishers.

Woolgar, S. (1991), “Beyond the citation debate: towards a sociology of measurement technologies and their use in science policy”, Science and Public Policy, Vol. 18, pp. 319-326.

Ziman, J. M. (1994), Prometheus Bound. Science in a Dynamic Steady State. Cambridge: Cambridge University Press.

Appendix

Standard Bibliometric Indicators:

Used by CWTS/Leiden to rank the 100 largest universities in Europe (Anthony F. J. van Raan, ”Bibliometric statistical properties of the 100 largest European universities: prevalent scaling rules in the science system”):

• Number of publications P in CI-covered journals of a university in the specified period;

• Number of citations C received by P during the specified period, without self-citations; with self-citations included the number is Ci, so that the number of self-citations is Sc = Ci – C and the relative amount of self-citations is Sc/Ci;

• Average number of citations per publication, without self-citations (CPP);

• Percentage of publications not cited (in the specified period) Pnc;

• Journal-based worldwide average impact as an international reference level for a university (JCS, journal citation score, which is our journal impact indicator), without self-citations (on this world-wide scale!); in the case of more than one journal we use the average JCSm; for the calculation of JCSm the same publication and citation counting procedure, time windows, and article types are used as in the case of CPP;

• Field-based worldwide average impact as an international reference level for a university (FCS, field citation score), without self-citations (on this world-wide scale!); in the case of more than one field (as almost always) we use the average FCSm; for the calculation of FCSm the same publication and citation counting procedure, time windows, and article types are used as in the case of CPP; we refer in this article to the FCSm indicator as the ‘field citation density’;

• Comparison of the CPP of a university with the world-wide average based on JCSm as a standard, without self-citations, indicator CPP/JCSm;

• Comparison of the CPP of a university with the world-wide average based on FCSm as a standard, without self-citations, indicator CPP/FCSm;

• Ratio JCSm/FCSm is the relative, field-normalized journal impact indicator.
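
The CWTS indicators listed above can be computed from publication-level records. The sketch below is a simplified illustration, not the CWTS implementation: it assumes each publication already carries its citation counts and the world-average reference values JCS and FCS for its journal and field, takes JCSm and FCSm as plain means over the publication set, and counts a publication as uncited when it has no citations other than self-citations. The `Publication` record and the sample figures are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Publication:
    citations_incl_self: int  # contributes to Ci
    self_citations: int       # contributes to Sc
    jcs: float                # world average citations per paper in its journal
    fcs: float                # world average citations per paper in its field


def cwts_indicators(pubs: list[Publication]) -> dict[str, float]:
    """Compute a subset of the standard CWTS indicators for one university."""
    if not pubs:
        raise ValueError("need at least one publication")
    p = len(pubs)
    ci = sum(x.citations_incl_self for x in pubs)
    sc = sum(x.self_citations for x in pubs)
    c = ci - sc                       # citations without self-citations
    cpp = c / p                       # CPP
    uncited = sum(1 for x in pubs if x.citations_incl_self - x.self_citations == 0)
    jcsm = mean(x.jcs for x in pubs)  # JCSm as a plain mean (assumption)
    fcsm = mean(x.fcs for x in pubs)  # FCSm as a plain mean (assumption)
    return {
        "P": p, "C": c, "Sc/Ci": sc / ci if ci else 0.0,
        "CPP": cpp, "Pnc%": 100 * uncited / p,
        "JCSm": jcsm, "FCSm": fcsm,
        "CPP/JCSm": cpp / jcsm, "CPP/FCSm": cpp / fcsm,
        "JCSm/FCSm": jcsm / fcsm,
    }


if __name__ == "__main__":
    sample = [
        Publication(10, 2, jcs=4.0, fcs=5.0),
        Publication(0, 0, jcs=2.0, fcs=3.0),
        Publication(5, 1, jcs=6.0, fcs=7.0),
    ]
    for name, value in cwts_indicators(sample).items():
        print(f"{name}: {value:.2f}")
```

For this invented sample CPP is 4.0, JCSm is 4.0 and FCSm is 5.0: CPP/JCSm = 1.0 says the papers are cited as often as their journals' average, while JCSm/FCSm = 0.8 shows that those journals are themselves cited below their fields' average.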

Bibliometric indicators – definitions and usage at Karolinska Institutet

Notation (symbol followed by indicator)

P Total number of publications

P_ISI Number of publications in Thomson ISI indices

P_TJ Number of publications in top journals

P_f5% Number of articles among the top 5% most cited in the field, of the same age and article type

p Relative share of publications

p_f5% Top 5% – share of articles among top 5% most cited in the field, of the same type and age

p_u Uncitedness – share of uncited publications

p_x Co-authoring – share of publications co-authored with another unit

p_w CEST field-based world share of publications

C Total number of citations

c_i Number of citations to a single publication i

c Average number of citations per publication

c̄_f Item oriented field normalized citation score average

C_f Total item oriented field normalized citation score

[c̄_f] CWTS field normalized citation score (crown indicator)

z̄_f[ln c] Item oriented field normalized logarithm-based citation z-score average

[c̄_j] Journal normalized citation score

c̄_j Item oriented journal normalized citation score average

[c̄_jp] Journal packet citation score

c_s Self citedness – share of citations from the own unit

μ_f Field reference value (field citation score) for articles of the same type, age and in the same field of research

μ̄_f Mean field reference value (mean field citation score)

τ_f5% Top 5% threshold value for the field; i.e. articles of the same type and age in the same scientific field

μ_f5% Top 5% reference value for the field; i.e. articles of the same type and age in the same scientific field

μ_f50% Top 50% reference value for the field; equals the median of the field

μ_j Journal reference value

h h-index

I_ISI ISI journal impact factor

I_f Journal to field impact score

ι_f Field reference value for journals, based on a specified time window
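
The item-oriented field-normalized average and the CWTS crown indicator in the KI list differ in the order of averaging: the former averages the ratios of each publication's citations to its field reference value, the latter divides the sum of citations by the sum of field reference values. The sketch below contrasts the two and adds uncitedness, the top-5% share and the h-index. It is a minimal illustration, not KI's implementation: each article is assumed to come with its citation count, its field reference value and its field's top-5% threshold, and treating "at or above the threshold" as top 5% is an assumption. The names and figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Article:
    citations: int          # c_i
    field_ref: float        # mu_f: expected citations for same field, type and age
    top5_threshold: float   # tau_f5%: citations needed to reach the field's top 5%


def h_index(citations: list[int]) -> int:
    """Largest h such that h publications have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    return sum(1 for rank, c in enumerate(ranked, start=1) if c >= rank)


def ki_indicators(arts: list[Article]) -> dict[str, float]:
    if not arts:
        raise ValueError("need at least one article")
    n = len(arts)
    # Item oriented: mean of the per-article ratios c_i / mu_f.
    item_oriented = sum(a.citations / a.field_ref for a in arts) / n
    # Crown indicator: ratio of sums, sum(c_i) / sum(mu_f).
    crown = sum(a.citations for a in arts) / sum(a.field_ref for a in arts)
    uncited = sum(a.citations == 0 for a in arts) / n               # p_u
    top5 = sum(a.citations >= a.top5_threshold for a in arts) / n   # p_f5%
    return {"item_oriented": item_oriented, "crown": crown,
            "p_u": uncited, "p_f5": top5,
            "h": h_index([a.citations for a in arts])}


if __name__ == "__main__":
    sample = [Article(40, 10.0, 35.0), Article(2, 1.0, 8.0),
              Article(0, 5.0, 20.0), Article(6, 4.0, 18.0)]
    print(ki_indicators(sample))
```

In this invented example the two normalizations diverge (1.875 for the item-oriented average against 2.4 for the crown indicator), because the ratio of sums weights each article by its field reference value, so the highly cited paper in the high-citation field counts for more.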


Activity indicators used in the recent review of Public Health research at KI

Scientific publications

(a) peer-reviewed original papers, major reviews

(b) non-peer-reviewed articles

Non-scientific publications

· books, chapters and monographs, such as dissertations, conference papers and proceedings, scientific-technical reports, project reports, etc.

· other material (newspaper articles, teaching materials, health information, basis of laws and regulations, policy documents and guidelines)

· electronic material (CD-ROM), papers and monographs on the web, databases

Other activity indicators

· scientific collaboration (meetings, conferences, scientific organizations, educational tasks, expert and advisory tasks, supervision of dissertations and assessments)

· societal collaboration (expert and advisory tasks, governmental tasks such as authorship of White Papers), media contact

[1] The model combines publication counts normalized by publication class with measures of relative quality, captured via proxy measures of the relative impact of a university's publications, overall and within given classes of the periodical literature. Standardized techniques are used that in each case show whether the analysed unit lies above or below the level that a relevant community of ”normal” researchers in the Nordic countries would achieve. The measure is arrived at by a mathematical-statistical formula, and the outcome is expressed as a number that is either larger or smaller than the ”norm”. This number can then be used as a multiplication factor to adjust the budgetary allocation to a given university upwards or downwards depending on the ”quality” of its performance.

[2] I want to thank Gunnar Sivertsen of NIFU/STEP in Oslo for supplying me with documentation from both the Norwegian and Danish (see below) agencies that have commented on the Swedish model.
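
The final step described in note [1], using a unit's deviation from the Nordic norm as a multiplication factor, can be sketched as follows. How the factor itself is estimated in Bilaga 8 is not reproduced here: the sketch simply assumes one factor per university (1.0 = the norm). Rescaling the adjusted amounts to a fixed total is a further assumption, reflecting that a fixed appropriation is redistributed rather than increased; `adjust_allocations` and the figures are hypothetical.

```python
def adjust_allocations(base: dict[str, float], factors: dict[str, float],
                       renormalize: bool = True) -> dict[str, float]:
    """Scale each university's base allocation by its quality factor.

    A factor above 1.0 means performance above the (assumed) Nordic norm,
    below 1.0 means below it. With renormalize=True the adjusted amounts
    are rescaled so that the total budget stays unchanged.
    """
    adjusted = {u: base[u] * factors[u] for u in base}
    if renormalize:
        scale = sum(base.values()) / sum(adjusted.values())
        adjusted = {u: a * scale for u, a in adjusted.items()}
    return adjusted


if __name__ == "__main__":
    base = {"X": 100.0, "Y": 100.0}   # hypothetical base allocations
    factors = {"X": 1.2, "Y": 1.0}    # X above the norm, Y exactly at it
    print(adjust_allocations(base, factors))
```

Under renormalization, university Y loses money (about 90.9 of its 100) even though its factor equals the norm, simply because X lies above it.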

[…] Evidence-based science policy and the systematic miscounting of performance in the humanities (28/04/2009). Paper given at workshop on evidence-based practice, University of Gothenburg, 19-20 May 2008. […]
